MIDI

A long time ago, analogue synthesizers were invented, probably intended mostly as single instruments, much like a piano or organ. As with the player piano, people eventually invented ways to automate synthesizers so that a user could sequence a set of notes into the synthesizer and have it play them back. While that synthesizer played one part, the user would go to a second synth and play the accompaniment. Artists being what they are, two synths were hardly enough, and performers quickly started one-person bands that consisted exclusively of synthesizers.

There were two serious issues. First, the synthesizers had to keep time, so internal clocks were installed. But these clocks tended to drift out of sync with one another, so that even if you managed to start all of your synths on the right foot-tap, by the end of a six-minute song any automated sequence would be out of sync with every other sequence.

The other problem was purely logistical: one person cannot practically play and trigger eight different synths doing different parts over the course of a song.

The solution that arose was MIDI: the Musical Instrument Digital Interface.

MIDI Data Streams

You might think of MIDI as a very simple network, like the internet but without routers, switches, or DNS servers. That is, all MIDI signals are sent to all clients, and it's up to each client to choose which signal to listen to. Imagine an office with a few employees in it. The employee sitting closest to the server is Employee 0, the next is Employee 1, and so on. Each morning, Employee 0 copies a dump of all company email off the server onto a thumbdrive, takes it to his or her computer, takes a copy of all email addressed to Employee 0, and passes the thumbdrive on to Employee 1. Employee 1 copies all email addressed to Employee 1, and so on down to the last employee.

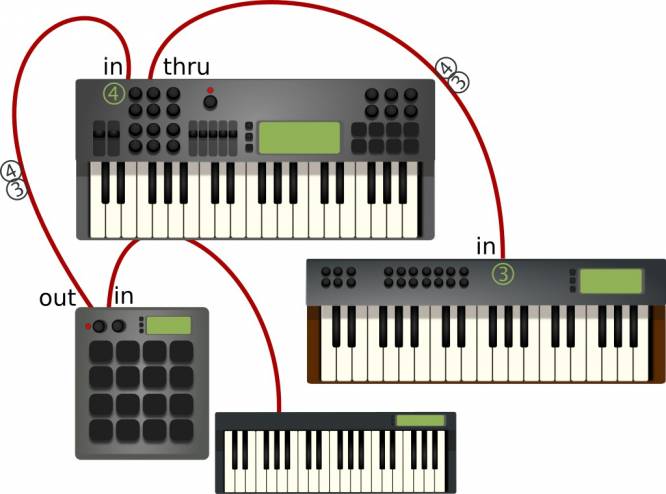

That is, more or less, how MIDI works. MIDI cables are strung from one device (perhaps a sequencer) to another (for instance, a synthesizer, and furthermore, one set to play a warm synth pad), and then from the first synth to a second synth (imagine this one is set to play the sound of a piano), and so on.

If you compose a tune on the sequencer, you can “play” both synthesizers at once by sending your MIDI data over the cable. If the first synth is set to listen in on Channel 3, and you send some chords along Channel 3, then when you play your composition, you will hear warm chords on the first synth. If the second synth is set to listen in on Channel 4, and you send a melody over Channel 4, then when you play your composition, you'll hear your melody played by a synthesized piano (because that is what we set the second imaginary synth to).

If you suddenly realised that it would sound much better with the chords played by a piano and the melody by a synth pad, all you have to do is tell the first synth to listen in on Channel 4 and the second synth to listen for Channel 3.

Simple as that.
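To make the channel idea concrete, here is a minimal Python sketch that is not tied to any real MIDI library. It assumes the standard MIDI wire encoding, in which a Note On message begins with a status byte of 0x90 plus the zero-based channel number (so Channel 3 is encoded as 2). The `Synth` class, its patch names, and the note numbers are hypothetical and exist only for illustration.

```python
NOTE_ON = 0x90  # high nibble of a Note On status byte


def note_on(channel, note, velocity=100):
    """Build a raw 3-byte Note On message for a 1-based MIDI channel."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels run from 1 to 16")
    return bytes([NOTE_ON | (channel - 1), note, velocity])


class Synth:
    """Hypothetical synth that only plays messages on its own channel."""

    def __init__(self, patch, channel):
        self.patch = patch
        self.channel = channel
        self.played = []

    def receive(self, message):
        status, note, _velocity = message
        is_note_on = (status & 0xF0) == NOTE_ON
        channel = (status & 0x0F) + 1  # back to the 1-based channel number
        if is_note_on and channel == self.channel:
            self.played.append(note)


pad = Synth("warm pad", channel=3)
piano = Synth("piano", channel=4)

# Every message reaches every synth; each synth filters by channel.
stream = [note_on(3, 60), note_on(3, 64), note_on(4, 72)]
for message in stream:
    for synth in (pad, piano):
        synth.receive(message)

print(pad.patch, pad.played)      # warm pad [60, 64]
print(piano.patch, piano.played)  # piano [72]
```

Swapping the parts is then a matter of setting `pad.channel = 4` and `piano.channel = 3`; the stream itself does not change.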

Three Types of MIDI Ports

There are three types of MIDI ports: In, Out, and Through (sometimes abbreviated to “Thru”). They each have specific functions. On any given MIDI-capable unit (where a “unit” might be a synth, sequencer, sampler, FX box, MIDI USB interface, MIDI patchbay, and so on):

- MIDI Out sends MIDI data generated by that unit. This is an important distinction; the MIDI Out port never sends “left-over” data that the unit has received in its MIDI In port.

- MIDI In accepts MIDI data generated by something else. Whether or not the unit listens to that data, and which channel the unit listens on, is up to the user.

- MIDI Thru indiscriminately echoes everything received from the MIDI In port.

As an example, a likely MIDI setup might have an Emu sequencer programmed with the parts for several different instruments. Each instrument is defined by a MIDI channel. A cable runs from the sequencer's Out port to a Korg's In port. The Korg listens in on channel 1 and plays the part found there. A cable connected to the Korg's Thru port takes all the MIDI data (channel 1 included) and sends it to a Roland, the next synth in line. The Roland listens for channel 2 and plays whatever it finds there. A cable connected to the Roland's Thru port sends all data (channels 1 and 2 included) to an Alesis drum machine, which listens to channel 3 and plays that.

Notice that MIDI Out was only used once in this example, and the bulk of the transport layer consists of In and Thru.
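The In/Out/Thru chain above can be modelled in a few lines of Python. This is only a sketch: the `Device` class is hypothetical, messages are reduced to `(channel, note)` tuples, and the note numbers are made up. It shows that Thru forwards everything untouched while each device acts only on its own channel.

```python
class Device:
    """Hypothetical MIDI unit with an In port and a Thru port."""

    def __init__(self, name, channel):
        self.name = name
        self.channel = channel
        self.thru = None   # device cabled to this unit's Thru port
        self.played = []

    def midi_in(self, message):
        channel, note = message
        if channel == self.channel:
            self.played.append(note)
        # Thru echoes everything received on In, whether or not it was used.
        if self.thru is not None:
            self.thru.midi_in(message)


korg = Device("Korg", channel=1)
roland = Device("Roland", channel=2)
alesis = Device("Alesis", channel=3)

# Cable Korg Thru -> Roland In, and Roland Thru -> Alesis In.
korg.thru = roland
roland.thru = alesis

# The sequencer's Out port feeds the Korg's In port.
sequence = [(1, 60), (2, 48), (3, 36), (1, 64)]
for message in sequence:
    korg.midi_in(message)

for device in (korg, roland, alesis):
    print(device.name, device.played)
```

The sequencer only ever talks to the first device; everything further down the line arrives by way of Thru.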

Computer Implementations of MIDI

MIDI worked out so well for the synth world that when computers started taking over the workload of electronic music, MIDI was reimplemented internally rather than replaced with something new. Anything you know from your experience with hardware synths, or from what you have read about it, applies directly to computer music. The only difference is that the synths are now applications, and the cables are virtualised and made of pixels.

For example, if you have sequencer software (such as Qtractor or Muse) and the JACK routing system, you can connect soft synths set to listen on some MIDI channel and, just that quickly, you're making music!

If you want to combine computerised MIDI with traditional MIDI, you can do so with a MIDI interface. These plug in to a computer (usually via USB, but sometimes with IEEE 1394 or “Firewire”) and send and receive MIDI data so that you can use hardware synths with a software sequencer, or a hardware sequencer with soft synths, and so on.

MIDI Driver for JACK

Normally, the internal computer MIDI data is handled by a driver provided by ALSA (the same project responsible for driving sound). This makes some logical sense, since when using MIDI you are probably using MIDI for sound. But with the rise of JACK, MIDI as an ALSA technology became, for most people, a technology ripe for deprecation.

There are use-cases for ALSA's MIDI driver. For instance, if you are just listening to MIDI files for fun, you have no good reason to start JACK, and the same is true if you are using LMMS, or Ardour version 4 or above, without JACK. But if you are using DAWs, soft synths, and effects, then the ALSA driver is probably not the MIDI driver you want because, just as with ALSA sound, ALSA MIDI cannot connect to JACK devices.

The two are, from the musician's perspective, two separate systems.

If you are using JACK to make your music, then you should drive MIDI with the seq driver. The seq driver is a dynamic bridge that sends and receives MIDI signals with JACK.

$ /usr/bin/jackd -R -d alsa -X seq

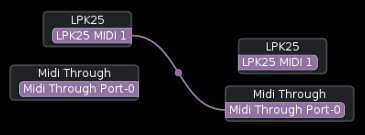

Launching jackd with seq defined as the MIDI driver, alsa as the audio driver, and, as always, the --realtime (or -R in shorthand) flag set, retains all ALSA MIDI ports and causes any JACK-aware application that you launch to create a JACK-aware MIDI port for itself.
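If you start JACK from a script rather than by hand, the same command can be assembled programmatically. The following Python sketch uses only the flags shown above; the `jackd_command` helper is hypothetical, and the `/usr/bin/jackd` path is assumed to match your system.

```python
import shlex


def jackd_command(midi_driver="seq", audio_driver="alsa", realtime=True):
    """Return the jackd argument list described in the text."""
    command = ["/usr/bin/jackd"]
    if realtime:
        command.append("-R")           # realtime scheduling
    command += ["-d", audio_driver]    # audio driver
    command += ["-X", midi_driver]     # MIDI driver
    return command


argv = jackd_command()
print(shlex.join(argv))  # /usr/bin/jackd -R -d alsa -X seq
# To actually start the server, pass argv to subprocess.Popen(argv).
```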

When integrating MIDI from the physical world, remember the different types of MIDI ports. If you plug a USB MIDI controller (a piano keyboard with a USB port which sends MIDI signals) into your computer and you want to use it to play a software synth, then in most setups you will connect the MIDI controller to a systemwide MIDI Thru port.

The MIDI Thru port transports your MIDI signals from the physical world into the MIDI Thru of the virtual studio so that all MIDI data you are generating, regardless of what channel it is destined for, gets passed on to all connected applications. In practice, this means that the seq bridge takes the MIDI data from your controller (coming in through the ALSA interface) and pipes it into the JACK environment via the System Capture MIDI interface. In other words, JACK sees your keypresses as a sort of MIDI microphone: it captures the input data and makes it available to all JACK applications.

That's your MIDI setup, in a nutshell.

This has only covered the network design of MIDI. The individual clients (your synths, samplers, sequencers, and so on) still must be configured on a per-project basis so that they know what MIDI channel they should be responding to. The MIDI Thru is built in, because unlike hardware, you have infinite MIDI Out ports on your controller and sequencer.

Individual sections of Slackermedia always explain how to route MIDI on a per-application basis.

MIDI Driver for ALSA

If you are using a MIDI controller without JACK (for example, with LMMS or Ardour in ALSA mode), then there is no configuration or routing required on a system level. Your MIDI signal is received by the ALSA MIDI driver, just by nature of how Linux accepts MIDI signals, and it is distributed to all MIDI-capable applications as a more or less universal MIDI Thru. As with JACK, it's up to you to set what channel each application responds to from within each application.

![An example of routing MIDI and sound with Patchage.](/handbook/lib/exe/fetch.php?media=seqmidi.jpg)